ControlDreamer: Blending Geometry and Style in Text-to-3D

BMVC 2024

* These authors contributed equally to this work, † Corresponding author

Abstract

Recent advancements in Text-to-3D technologies have significantly contributed to the automation and democratization of 3D content creation. Building upon these developments, we aim to address the limitations of current 2D lifting methods in achieving consistent and photorealistic 3D model editing. We introduce a novel approach by training a multi-view ControlNet on a carefully curated corpus of 100K refined texts. This multi-view ControlNet is then integrated into our editing pipeline, ControlDreamer, significantly enhancing the style editing of 3D models. Additionally, we present a comprehensive benchmark for 3D style editing, encompassing a broad range of subjects, including objects, animals, and characters, to further facilitate diverse 3D scene generation. Our comparative analysis reveals that this new pipeline outperforms existing 3D editing techniques, achieving state-of-the-art results as evidenced by human evaluations and CLIP score metrics.
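The abstract reports CLIP score as one automatic metric. The sketch below shows one common formulation, used for example by the torchmetrics `CLIPScore` metric: 100 * max(cosine similarity, 0) between an image embedding and a text embedding. The page does not state which CLIP variant was used or how scores are aggregated over rendered views, so averaging over views is an assumption here. The embeddings are random placeholders, not outputs of a real CLIP encoder.

```python
import numpy as np


def clip_score(image_embs, text_emb):
    """Mean CLIP score over rendered views (aggregation is an assumption).

    image_embs: (num_views, dim) image embeddings, one per rendered view.
    text_emb:   (dim,) embedding of the style prompt.
    Each view scores 100 * max(cos_sim, 0); the per-view scores are averaged.
    """
    image_embs = image_embs / np.linalg.norm(image_embs, axis=1, keepdims=True)
    text_emb = text_emb / np.linalg.norm(text_emb)
    cos = image_embs @ text_emb  # cosine similarity per view
    return float(np.mean(100.0 * np.maximum(cos, 0.0)))


# Hypothetical embeddings for four rendered views (random, illustration only).
rng = np.random.default_rng(0)
text_emb = rng.standard_normal(512)
view_embs = np.stack([text_emb + 0.1 * rng.standard_normal(512) for _ in range(4)])
print(clip_score(view_embs, text_emb))
```

Clipping negative similarities at zero keeps the score in the range [0, 100], which makes scores from different prompts easier to compare.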

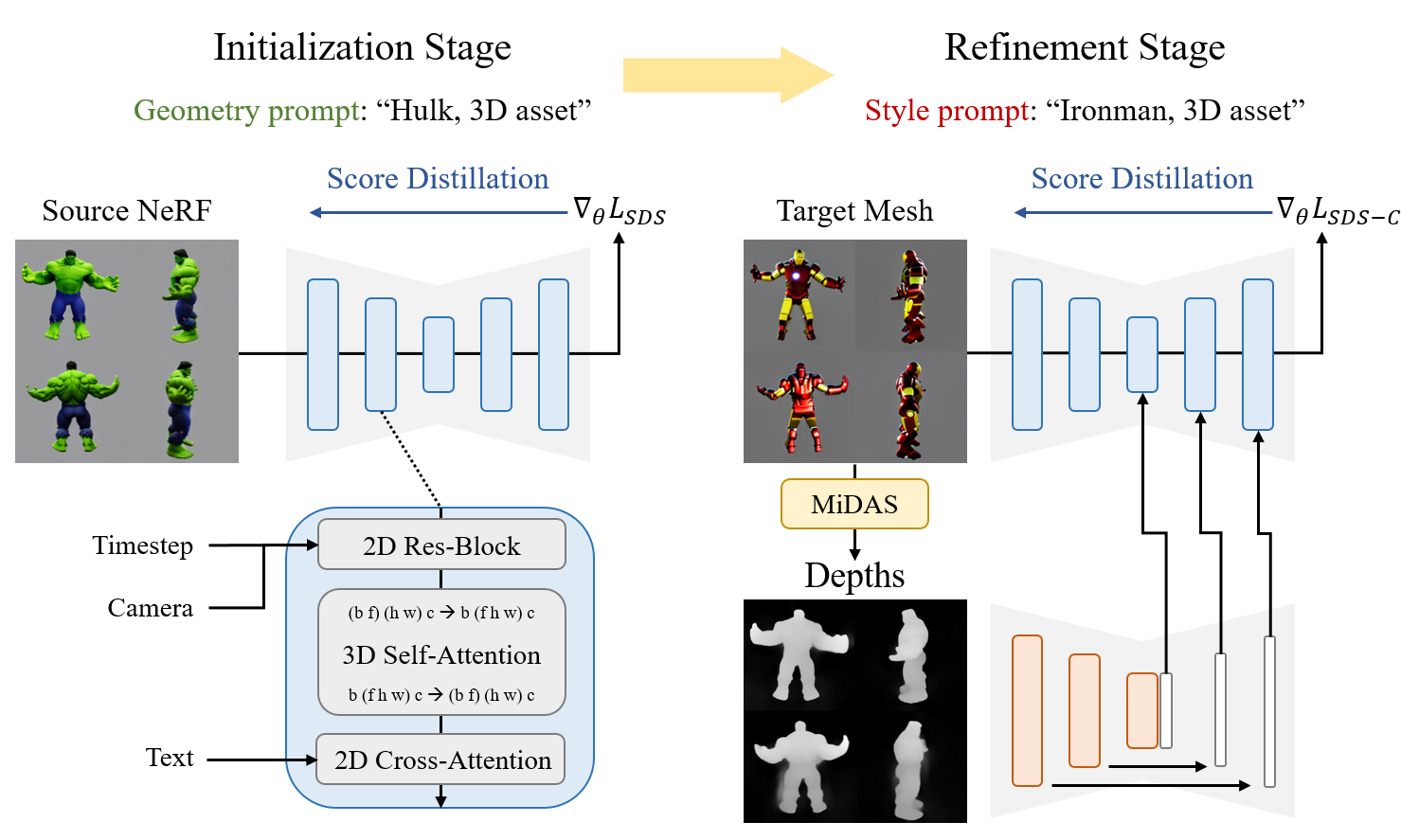

Overall pipeline

Our multi-view ControlNet generates four different views, each conditioned on the corresponding multi-view depth image. After the 3D geometry is initialized as a shape prior, the ControlNet serves as a 3D style editor via Score Distillation Sampling (SDS).
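To make the Score Distillation Sampling step concrete, here is a minimal numpy sketch of SDS updates on four rendered views. It rests on several loud assumptions and is not the authors' implementation. The "renderer" is the identity, so the views themselves are optimized instead of NeRF parameters. The noise schedule is linear (alpha_bar = 1 - t), and the weighting is w(t) = 1 - alpha_bar. `toy_eps_predictor` is a hypothetical stand-in for the depth-conditioned multi-view ControlNet: it behaves like an ideal denoiser for a fixed `target`.

```python
import numpy as np


def toy_eps_predictor(x_t, alpha_bar, target):
    # Hypothetical stand-in for the depth-conditioned multi-view ControlNet.
    # It is the ideal noise predictor for a data distribution concentrated on
    # `target`, i.e. the views that the style prompt and depth maps "ask for".
    return (x_t - np.sqrt(alpha_bar) * target) / np.sqrt(1.0 - alpha_bar)


def sds_grad(x, target, rng, t_min=0.02, t_max=0.98):
    # Sample a diffusion time and Gaussian noise.
    t = rng.uniform(t_min, t_max)
    alpha_bar = 1.0 - t  # assumed linear schedule
    eps = rng.standard_normal(x.shape)
    # Forward-diffuse the rendered views.
    x_t = np.sqrt(alpha_bar) * x + np.sqrt(1.0 - alpha_bar) * eps
    eps_hat = toy_eps_predictor(x_t, alpha_bar, target)
    w = 1.0 - alpha_bar  # one common weighting, w(t) = 1 - alpha_bar
    # Identity renderer, so d(render)/d(params) = I and the SDS gradient
    # is w(t) * (eps_hat - eps); no gradient flows through the predictor.
    return w * (eps_hat - eps)


rng = np.random.default_rng(0)
views = np.zeros((4, 8, 8, 3))  # four 8x8 RGB views, one per camera
target = np.ones_like(views)    # placeholder for what the style prior prefers
for _ in range(50):
    views -= 1.0 * sds_grad(views, target, rng)  # plain gradient descent, lr = 1
print(np.abs(views - target).max())
```

Because the toy predictor is an ideal denoiser for `target`, the injected noise cancels and the SDS gradient reduces to a positive multiple of `(views - target)`. Gradient descent therefore pulls the views toward the target.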

Demo Video of Controlled 3D Editing

Our multi-view ControlNet enables stylized 3D editing of source 3D assets with Score Distillation Sampling.

Geometry prompt: A high-resolution rendering of a Hulk, 3d asset

Style prompt: A high-resolution rendering of an Iron Man, 3d asset ...
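The demo pairs a geometry prompt with a style prompt. One way to read this two-stage procedure is sketched below as a plain Python schedule. The first stage builds the source geometry from the geometry prompt. The second stage switches to the style prompt and the depth-conditioned multi-view ControlNet. The stage names, the use of MVDream as the first-stage prior, and the step counts are assumptions for illustration, not the authors' configuration.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Stage:
    name: str
    prompt: str
    prior: str             # diffusion model assumed to supply the SDS gradient
    depth_condition: bool  # whether depth rendered from the current NeRF conditions the prior
    steps: int


def two_stage_schedule(geometry_prompt, style_prompt, geometry_steps, style_steps):
    """Hypothetical two-stage plan: build the geometry first, then restyle it."""
    return [
        Stage("geometry", geometry_prompt, "MVDream", False, geometry_steps),
        Stage("style", style_prompt, "multi-view ControlNet", True, style_steps),
    ]


def stage_at(schedule, step):
    """Return the stage that is active at a global optimization step."""
    for stage in schedule:
        if step < stage.steps:
            return stage
        step -= stage.steps
    raise IndexError("step is past the end of the schedule")


plan = two_stage_schedule(
    "A high-resolution rendering of a Hulk, 3d asset",
    "A high-resolution rendering of an Iron Man, 3d asset",
    geometry_steps=5000,  # placeholder value
    style_steps=5000,     # placeholder value
)
print(stage_at(plan, 0).name)     # geometry
print(stage_at(plan, 5000).name)  # style
```

Keeping the stages as data makes the comparison in this page easy to express. The baselines keep MVDream as the prior in every stage, while ControlDreamer swaps in the depth-conditioned ControlNet for the style stage.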

Example generated objects

ControlDreamer edits the source 3D geometry so that it better aligns with the given style text prompt.

Each example shows, in order, the source geometry, our result (ControlDreamer), the baseline results (MVDream 2-stage and MVDream), and the target style.

Source | ControlDreamer (Ours) | MVDream (2-stage) | MVDream

Hulk to Captain America

Source | ControlDreamer (Ours) | MVDream (2-stage) | MVDream

British Shorthair to Lioness

* ControlDreamer (Ours, MV-ControlNet): edited with our ControlDreamer pipeline using the multi-view ControlNet.

* MVDream: edited with MVDream only, without ControlDreamer.

Counterfactual Generation

We also compared our results with Luma AI's GENIE, just for fun. We used this original source for Blender rendering.

Tiger panda

* We initialized the geometry NeRF as a grizzly and used "tiger panda" as the style prompt.

Convergence

To validate the efficiency and effectiveness of ControlDreamer, we compare its training progress with that of MVDream; each run comprises 5,000 steps.

Hulk to Spiderman

* The top row shows intermediate results of MVDream during training, and the bottom row shows those of ControlDreamer.

Citation

@article{oh2023controldreamer,
  author = {Oh, Yeongtak and Choi, Jooyoung and Kim, Yongsung and Park, Minjun and Shin, Chaehun and Yoon, Sungroh},
  title = {ControlDreamer: Blending Geometry and Style in Text-to-3D},
  journal = {arXiv:2312.01129},
  year = {2023},
}